Optimizing SAGE Net: Achieving High Performance with Shorter Input Sequences for Online Inference

Table of Links

Abstract and 1 Introduction

-

Related works

-

Problem setting

-

Methodology

4.1. Decision boundary-aware distillation

4.2. Knowledge consolidation

-

Experimental results and 5.1. Experiment Setup

5.2. Comparison with SOTA methods

5.3. Ablation study

-

Conclusion and future work and References

\

Supplementary Material

- Details of the theoretical analysis on KCEMA mechanism in IIL

- Algorithm overview

- Dataset details

- Implementation details

- Visualization of dusted input images

- More experimental results

4.1. Decision boundary-aware distillation

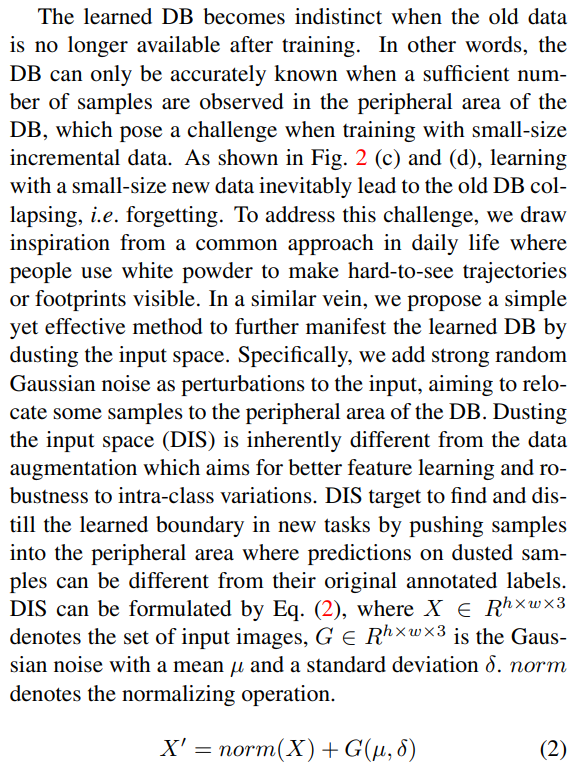

Decision boundary (DB) which reflects the inter-class relationship and intra-class distribution is one of the most valuable knowledge stored in a well-trained model. It can be defined by distinguishing between inner samples (correctly classified) and outer samples (misclassified), as illustrated in Fig. 2 (a). In new IIL, promoting the model’s performance on new data without forgetting equals to extend the existing DB to enclose those new outer samples while retain the DB in other locations. However, to learn from new data, existing methods take the annotated one-hot labels as the optimal learning target for granted. We argue that one-hot labels ignore the relationship between target class and other classes. Naively learning with one-hot labels tends to push outer samples towards the DB center, which can potentially interfere with the learning of other classes, especially when the data is insufficient to rectify such interference in IIL.

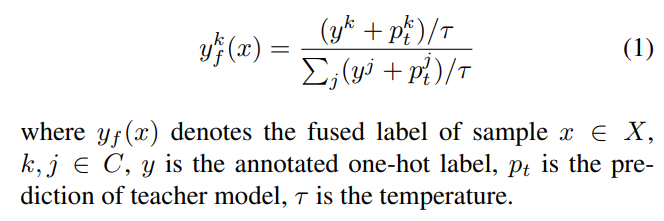

\ To address the inter-class interference, we propose to learn the new data by fusing annotated one-hot labels with predictions of existing model, as show in Eq. (1). For new outer samples, learning with fused labels retains the knowledge on none-target classes and extends the decision boundary more moderately to enclose them. Knowledge of nonetarget classes is crucial for retain learned knowledge, which also reported by Mittal et al. [18] in using super-class labels. For new inner samples, keeping the DB around it is a safer choice, i.e. using existing predicted scores as the learning target. However, we propose to push the DB away from the new peripheral inner samples by sharpening the teacher’s prediction score with the one-hot label, which in essence enlarges the inter-class distance while retaining the DB. Hence, although with different motivation, training on new outer samples and new inner samples are unified through the fused label.

\

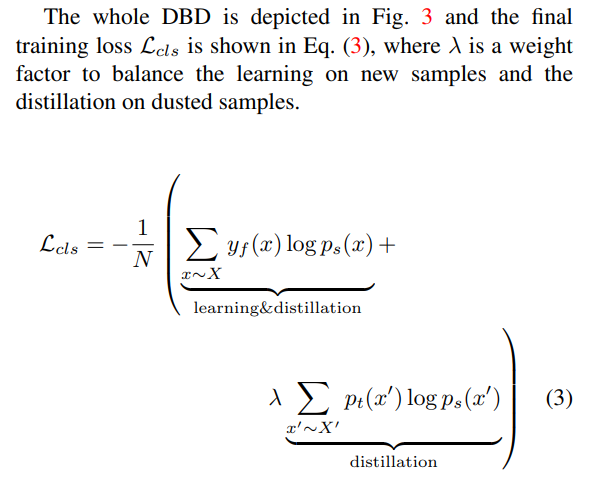

\ Alternating the learning target with fused labels unifies the knowledge learning and retaining on new samples in a simple manner. Although this manner helps retain some learned knowledge, preservation of DB in other directions that only supported by the old data is not addressed.

\

\

\ Decision boundary-aware distillation enables the student network to learning new knowledge with awareness of the existing knowledge.

\

:::info Authors:

(1) Qiang Nie, Hong Kong University of Science and Technology (Guangzhou);

(2) Weifu Fu, Tencent Youtu Lab;

(3) Yuhuan Lin, Tencent Youtu Lab;

(4) Jialin Li, Tencent Youtu Lab;

(5) Yifeng Zhou, Tencent Youtu Lab;

(6) Yong Liu, Tencent Youtu Lab;

(7) Qiang Nie, Hong Kong University of Science and Technology (Guangzhou);

(8) Chengjie Wang, Tencent Youtu Lab.

:::

:::info This paper is available on arxiv under CC BY-NC-ND 4.0 Deed (Attribution-Noncommercial-Noderivs 4.0 International) license.

:::

\

You May Also Like

Wintermute Analysis: Stablecoins Grow While ETFs and DATs Stall in Crypto Markets

China Launches Cross-Border QR Code Payment Trial