The Basic Reasoning Test That Separates Real Intelligence from AI

Table of Links

Abstract and 1. Introduction

1.1 Syllogisms composition

1.2 Hardness of long compositions

1.3 Hardness of global reasoning

1.4 Our contributions

-

Results on the local reasoning barrier

2.1 Defining locality and auto-regressive locality

2.2 Transformers require low locality: formal results

2.3 Agnostic scratchpads cannot break the locality

-

Scratchpads to break the locality

3.1 Educated scratchpad

3.2 Inductive Scratchpads

-

Conclusion, Acknowledgments, and References

A. Further related literature

B. Additional experiments

C. Experiment and implementation details

D. Proof of Theorem 1

E. Comment on Lemma 1

F. Discussion on circuit complexity connections

G. More experiments with ChatGPT

F Discussion on circuit complexity connections

\

\

\ On the other hand, with appropriate setup, deep neural nets, recurrent neural nets, and Transformers with scratchpads are Turing complete. Furthermore, they can simulate a Turing machine using resources polynomial in the number of steps the Turing machine runs for and the input length. So, with appropriate parameters these can efficiently solve any problem that it is possible to solve efficiently. A little more precisely, given a neural net where the input bits are 0 or 1, it is fairly easy to set a neuron to compute an AND, OR, or NOT of one or more previous values, so any circuit can be converted into a neural net of at most equal size. Any efficient computation can be performed by a polynomial-sized circuit, so it can also be performed by a polynomial-sized deep neural net. Also, given a Turing machine in a state where all entries in its tape that are more than n steps away from the head or heads are in their initial state, there is a circuit of depth O(1) and size O(n) that computes the next state of the Turing machine. That means that running a Turing machine for T steps on an input of length n can be simulated by a recurrent neural net of size O(T + n) and T recurrences. Conversely, given a neural net with a reasonable activation function and sub-exponential edge weights, one can estimate the output of each neuron to within an exponentially small error in time polynomial in the size of the net.

\

\

\

\

G More experiments with ChatGPT

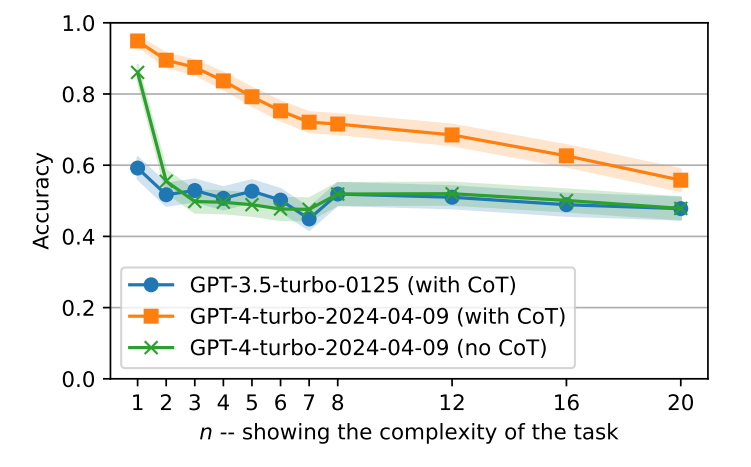

Height comparison. For n ≥ 1, we consider 3n + 2 people having different heights. We give the model 3n + 1 pairwise relations between the consecutive people (in order of height) in a random order. Using this information, one can understand the order of the heights for all people by combining the given information. We ask the model about the relation between person n + 1 and 2n + 2. An example for n = 1 is

\ “Omar is taller than Sara. Vlad is taller than David. Farah is taller than Omar. Sara is taller than Vlad. Is Omar taller than Vlad?"

\ where the answer is true. Note that to answer this question correctly one has to combine at least n + 1 relations. Thus, the locality of the task is always larger than n. (The exact locality would depend on the tokenization.) We found out that ChatGPT (GPT3.5) fails at this task even for n = 1 (simplest case). Note that when working with the GPT3.5 model we used the following prompt so that the model is able to use chain-of-thought reasoning: "You can reason if you want but make sure to include yes/no in your answer." Interestingly, GPT4 performs much better than GPT3.5. We also observed that it is often the case that when GPT4 answers correctly to the question, it orders people based on their height, very similar to what we do in the scratchpad of the graph task. Motivated by this, we tested one more setting where we prompted GPT4 with "Answer only with a yes or no." to avoid the chain-of-thought reasoning. In this case, as expected, the model couldn’t solve the height comparison task for n > 1. The results are shown in Figure 11.

\

\

:::info Authors:

(1) Emmanuel Abbe, Apple and EPFL;

(2) Samy Bengio, Apple;

(3) Aryo Lotf, EPFL;

(4) Colin Sandon, EPFL;

(5) Omid Saremi, Apple.

:::

:::info This paper is available on arxiv under CC BY 4.0 license.

:::

\

You May Also Like

Let insiders trade – Blockworks

XRP price rebounds off lower band as new XRP Ledger wallets surge to 8-month high